Send Data from Salesforce to Data Cloud using Ingestion API and Flow

In this blog post, we look at a Spring '24 feature: sending data to Data Cloud using Flows and the Ingestion API. The release note is available here.

Introduction

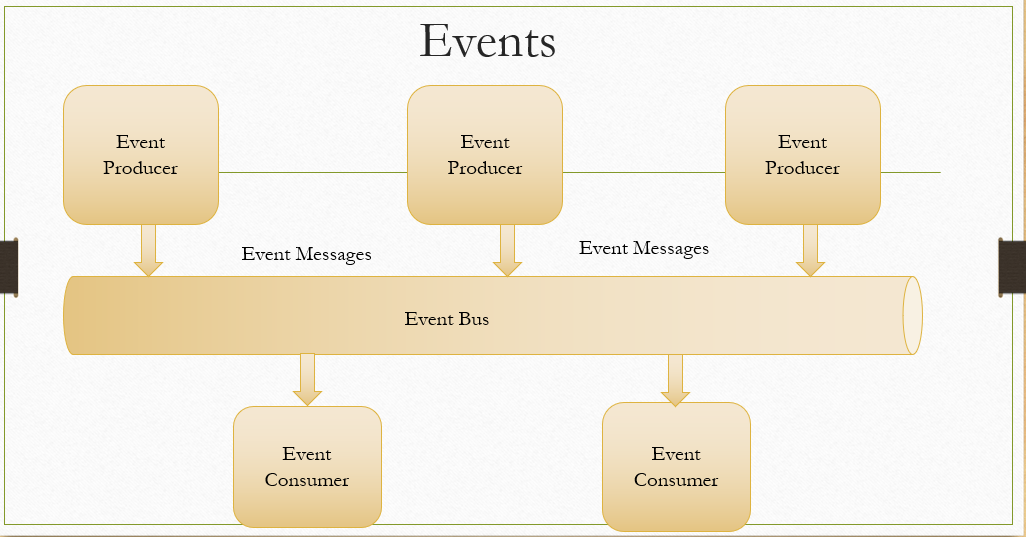

As we all know, Data Cloud helps us build a unified view of customers by harmonizing data from multiple source systems and producing meaningful segments and insights that other platforms can use for further processing.

If you are new to Data Cloud, refer to the Salesforce documentation or the videos on the Salesforce Developers YouTube channel.

What is Ingestion API

As per the Salesforce documentation, the Ingestion API is a REST API that offers two interaction patterns: bulk and streaming. The streaming pattern accepts incremental updates to a dataset as those changes are captured, while the bulk pattern accepts CSV files in cases where data syncs occur periodically. The same data stream can accept data from both the streaming and the bulk interactions.

The Ingestion API is useful for sending interaction-based data to Data Cloud in near real time. The submitted data is processed every 15 minutes.
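To make the streaming pattern more concrete, here is a minimal Python sketch of what a streaming call could look like. It assumes the path `/api/v1/ingest/sources/{connector}/{object}` on the tenant-specific endpoint, which is how we understand the Salesforce documentation; please verify it against the current API reference. The tenant host, the field names, and the access token are placeholders, and the request is only built here, not sent.

```python
import json
import urllib.request


def build_streaming_request(tenant_endpoint, source_api_name, object_name,
                            records, access_token):
    """Build (but do not send) a streaming ingestion request.

    The URL shape is an assumption based on our reading of the docs.
    """
    url = (f"https://{tenant_endpoint}/api/v1/ingest/sources/"
           f"{source_api_name}/{object_name}")
    # Streaming payloads wrap the records in a top-level "data" array.
    body = json.dumps({"data": records}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


request = build_streaming_request(
    tenant_endpoint="example-tenant.c360a.salesforce.com",  # placeholder host
    source_api_name="Donation_Ingestion",  # connector name used in this post
    object_name="Donation",
    records=[{
        "name": "D-0001",            # hypothetical field names and values
        "category": "Education",
        "donation_amount": 50.0,
    }],
    access_token="<data-cloud-access-token>",  # placeholder
)

print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Passing the request to `urllib.request.urlopen` would actually send it. The Flow action described later in this post removes the need to write such a call by hand.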

Use Case

When external users submit donations from a public site, the donation details need to be sent to Data Cloud for segmentation and insights.

Implementation Details

1. Create a Donation custom Object

We can create a custom object in Salesforce with a minimal set of fields such as:

- Name

- Category

- Donation Amount

2. Create a screen flow to input donation details

Add screen elements to input the above 3 variables and create a record once the values are entered.

3. Add this flow component to a Public site/ Salesforce page

Normally, donations would be submitted from an Experience Cloud site. For testing purposes, let us add the flow to the internal Home page.

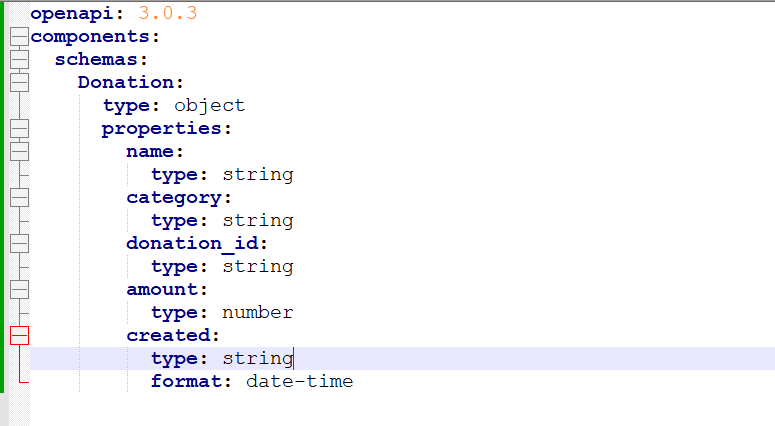

4. Create Ingestion API Connector and upload the Schema

From the Data Cloud Setup screen, create a new Ingestion API connector:

- Click the New button under Data Cloud Setup -> Ingestion API Connector

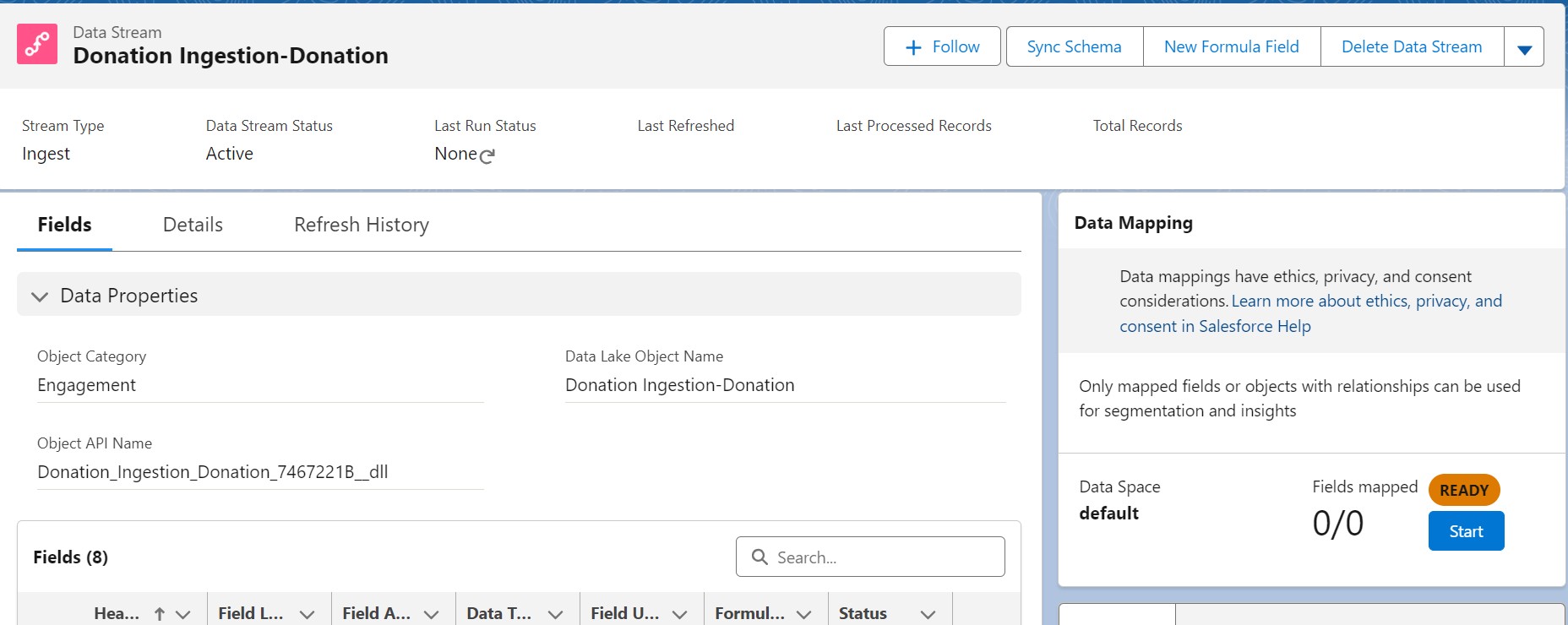
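The schema file that gets uploaded to the connector is, as far as we know, an OpenAPI-style YAML document that lists each object and its fields. The Python sketch below prints one possible schema for the Donation object. The field names (`name`, `category`, `donation_amount`, `created_date`) and the OpenAPI version string are assumptions for illustration, so adjust them to match your own object.

```python
def to_yaml(node, indent=0):
    """Render nested dicts as YAML text (enough for this small schema)."""
    lines = []
    pad = "  " * indent
    for key, value in node.items():
        if isinstance(value, dict):
            lines.append(f"{pad}{key}:")
            lines.append(to_yaml(value, indent + 1))
        else:
            lines.append(f"{pad}{key}: {value}")
    return "\n".join(lines)


# Hypothetical schema for the Donation object used in this post.
donation_schema = {
    "openapi": "3.0.3",
    "components": {
        "schemas": {
            "Donation": {
                "type": "object",
                "properties": {
                    "name": {"type": "string"},
                    "category": {"type": "string"},
                    "donation_amount": {"type": "number"},
                    "created_date": {"type": "string", "format": "date-time"},
                },
            }
        }
    },
}

schema_text = to_yaml(donation_schema)
print(schema_text)
```

Saving the printed text to a `.yaml` file gives something that can be tried as the schema upload for the connector.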

5. Create Data Stream from Ingestion API

Data streams in Data Cloud allow you to ingest data from Salesforce and other data sources. To ingest data using the Donation_Ingestion API connector that we just created, a data stream needs to be defined as shown below:

- Create new Data Stream

- Select the source for the Data Stream. In our case it is Ingestion API

- Select the newly created Donation Ingestion API

- Configure the selected object and field details as shown below:

- Deploy the Data Stream

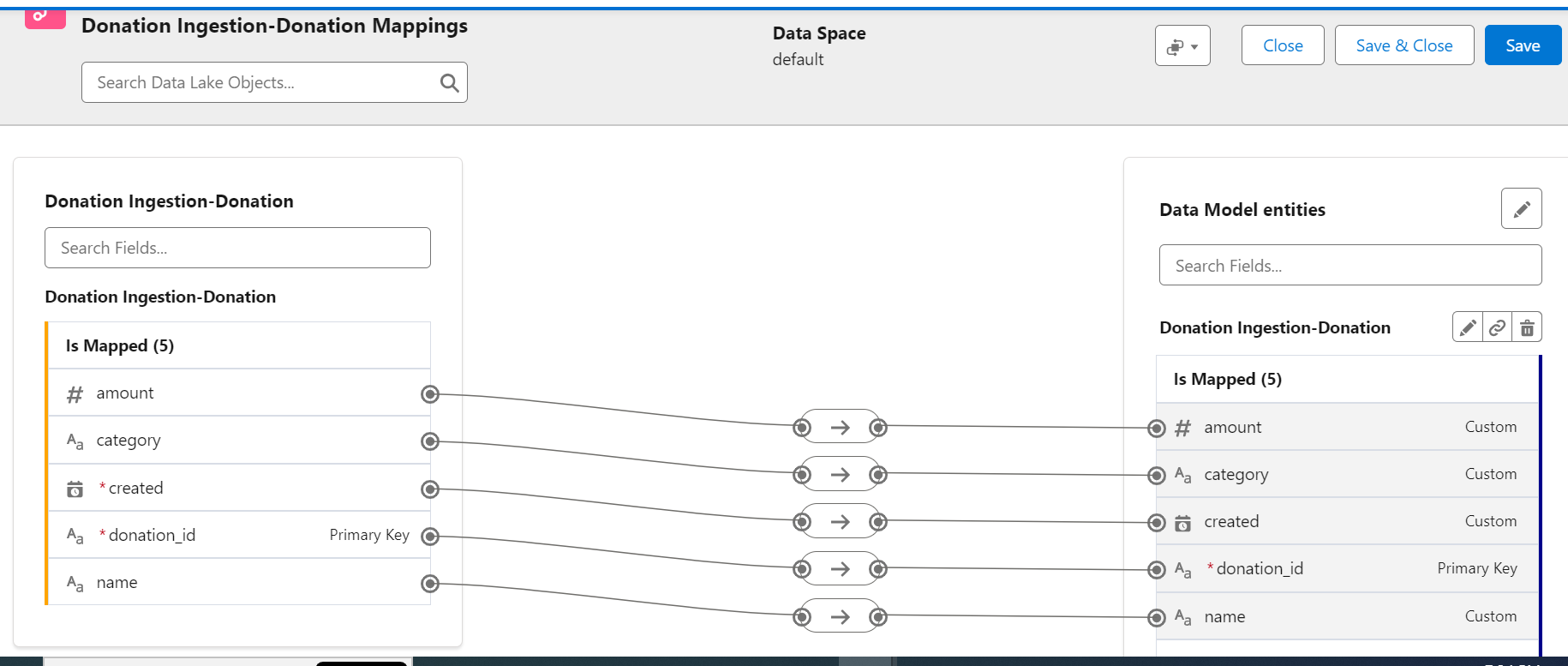

Before the ingested data can be processed further, it needs to be mapped to Data Model Objects in Data Cloud. A single Data Lake Object can be mapped to one or more standard or custom Data Model Objects. After that, all further processing is applied to the data mapped to those Data Model Objects.

In our case, we are going to create a custom Data Model Object and do the mapping.

- Start Data Mapping and click on Select Objects

- Select the Custom Data Model tab and click New Custom Object

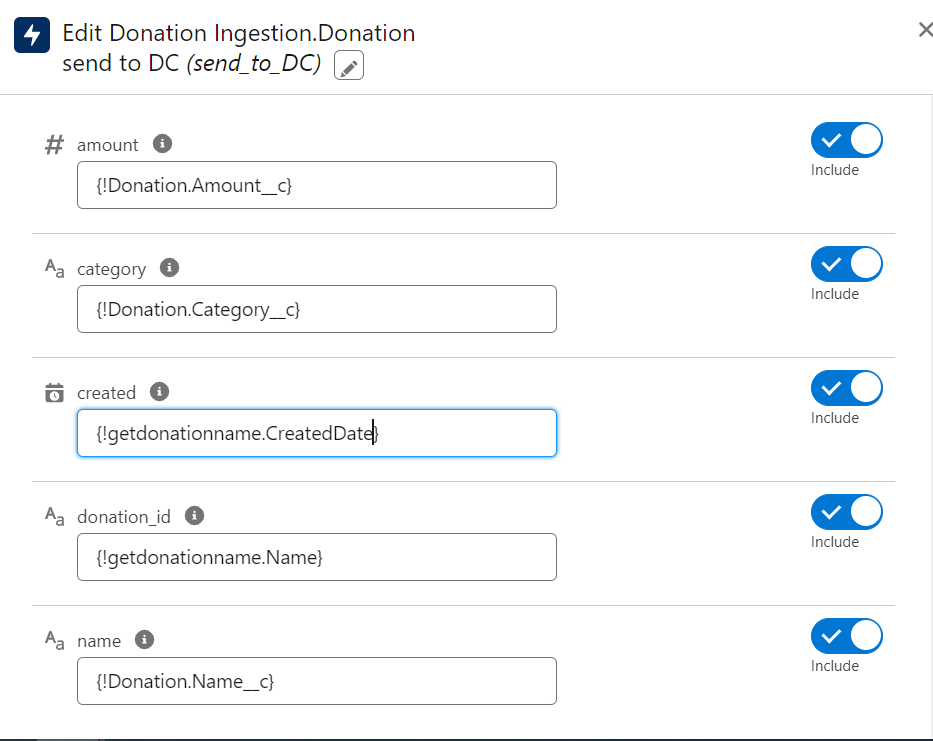

With the Spring '24 feature, once an Ingestion API connector has been successfully created, it is available by default as an action in screen flows and record-triggered flows.

In a record-triggered flow, this action can be added only on a scheduled path, because it is a callout operation.

- Modify the flow to retrieve the new Donation record's name and created date

- Add a new Action to the screen flow and select "Send to Data Cloud" from the category

- The newly created Ingestion API connector, Donation Ingestion, is available by default

- Map all the input variables

- Open the screen flow and enter the details

- The record is created successfully

- In the Data Cloud app, go to the Data Explorer tab and check the Data Lake Object - Donation. We can see that the new record created in Salesforce has been synced with Data Cloud.
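The input variable mapping in the steps above can be pictured as turning the flow's values into one record that matches the uploaded schema. Here is a small Python sketch of that idea. The field names, the type check, and the UTC timestamp format are assumptions for illustration; the Flow action does this mapping for us declaratively.

```python
from datetime import datetime, timezone

# Hypothetical field names; they must match the schema uploaded to the connector.
EXPECTED_TYPES = {
    "name": str,
    "category": str,
    "donation_amount": float,
    "created_date": str,
}


def to_ingestion_record(name, category, amount, created):
    """Map flow values to a record shaped like the (assumed) schema."""
    record = {
        "name": name,
        "category": category,
        "donation_amount": float(amount),
        # Assumed format: ISO 8601 in UTC with milliseconds.
        "created_date": created.astimezone(timezone.utc)
                               .strftime("%Y-%m-%dT%H:%M:%S.000Z"),
    }
    # Fail early if a value does not match the type declared in the schema.
    for field, expected in EXPECTED_TYPES.items():
        if not isinstance(record[field], expected):
            raise TypeError(f"{field} should be {expected.__name__}")
    return record


record = to_ingestion_record(
    name="D-0001",
    category="Education",
    amount=50,
    created=datetime(2024, 3, 1, 10, 30, tzinfo=timezone.utc),
)
print(record)
```

If a field is missing or has the wrong type, it is usually easier to catch that before the data reaches Data Cloud than to debug it afterwards in Data Explorer.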
